Artificial Intelligence: history, status and prospects

The global debate on the potential, opportunities, role and risks of adopting and deploying Artificial Intelligence systems has now reached one of its peaks in intensity and breadth. Yet, as many people know, this discipline, which periodically resurfaces in the news, has long-standing origins.

Without retracing the centuries-long history of attempts and research aimed at simulating the computational capabilities of the human brain (from Blaise Pascal to Charles Babbage to Alan Turing), we can place the birth of “modern” Artificial Intelligence in 1955, the year in which John McCarthy coined the term. In the following years, academic research and experiments laid the foundations for the first practical applications in industry (by then we are in the 1980s). From there, the discipline has evolved steadily, both across its branches and related fields (expert systems, neural networks, robotics, machine learning, generative AI…) and in its areas of concrete application, although, at least in the eyes of the general public, this evolution has alternated between phases of stagnation and sudden acceleration.

The current phase is one of acceleration: the arrival on the market of products and services based on AI (whether genuinely or for marketing purposes) and, more recently, the ChatGPT phenomenon have forcefully reignited the interest of the market, as well as that of society and institutions. AI technologies are now widespread in all sectors, used both for business purposes and in everyday life. We use “smart” home devices and interact with customer service supported by chatbots. To give just a few examples, we find Artificial Intelligence in banks to assess credit risk, in insurance companies to identify fraud, in service companies to analyze customer lifetime value and detect signs of churn, and in public administration to automatically route incoming certified e-mail messages to the appropriate offices. Beyond these examples, the areas of application and the number of potential users keep growing: as always, market interest generates resources for the research and development of new products and services, which in turn feed consumer expectations, in an evolutionary cycle that so far shows no sign of being interrupted.

According to estimates by PwC, published in the report “Sizing the prize – PwC’s Global Artificial Intelligence Study: Exploiting the AI Revolution,” the global Artificial Intelligence market will grow at an annual rate of 37.3% between 2023 and 2030, reaching $1,811.8 billion in 2030, and AI will have a positive impact on the global economy estimated at $15.7 trillion by 2030. The June 2023 S&P Global Market Intelligence report predicts that the market for generative AI alone (of which ChatGPT is the best-known example) “will reach $3.7 billion in 2023 and reach $36.0 billion by 2028, growing at an annual rate of 58%.” According to McKinsey, these technologies “will be able to add the equivalent of $2.6 to $4.4 trillion a year to the global economy – for comparison, the total GDP of the United Kingdom in 2021 was $3.3 trillion”. In Italy, recent research by the “Artificial Intelligence” Observatory of the School of Management of the Politecnico di Milano notes that “The AI market in 2022 reached 500 million euros, with a growth of as much as 32% in a single year. … 61% of large Italian companies have already launched at least one AI project, 10 percentage points more than five years ago. … 93% of Italians know about AI, 58% consider it very present in daily life and 37% in working life. 73% of users have concerns about the impacts on their work.”
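The growth rates quoted above are compound annual growth rates (CAGR), so they can be sanity-checked from the start and end values alone. The sketch below does this for the S&P Global Market Intelligence figures ($3.7 billion in 2023, $36.0 billion in 2028), assuming five compounding years between the two values; the function name `cagr` is chosen here for illustration.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1

# S&P Global Market Intelligence generative AI figures (USD billion),
# assuming five compounding years from 2023 to 2028
rate = cagr(3.7, 36.0, years=5)
print(f"Implied annual growth: {rate:.1%}")  # Implied annual growth: 57.6%
```

The implied rate of about 57.6% is consistent with the roughly 58% annual growth quoted in the report.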

Artificial Intelligence: a resource to oversee

The last figure brings the focus back to the debate on the impacts, both positive and negative, that such a widespread and fast-growing technology may have on society and individuals. Among the long-feared risks are the more science-fictional ones, such as machines rebelling against humans, and the concrete ones related to employment, which, research tells us, still worry many people.

Then there are actual or potential impacts more closely related to the violation of rights. Here are a few examples.

- The risk that a company or individual is refused a loan by a bank on the basis of a machine learning algorithm, without the bank being able to explain the reasons for the rejection to the applicant (explainability)

- The risk that a hiring decision made by a company’s HR department on the suggestion of an AI system goes against a candidate because the system was trained on data that introduces a bias against certain categories, e.g., gender or ethnicity (fairness – bias)

- The risk that an organization designs and uses an AI system to steer vulnerable or otherwise easily influenced individuals toward behavior that benefits the organization (fairness).

The likelihood of events such as those listed above, and others, becomes increasingly concrete as the number and criticality of the AI systems managed by an organization grow. It is therefore now essential to set up, and constantly exercise, oversight of the available AI assets and of the processes that govern their life cycle, capable of averting these risks.

It is a conviction that institutions at the global level are also embracing.

Regulatory requirements: the AI Act

On our continent specifically, in June 2023 the European Parliament gave the green light to the AI Act, a regulation expected to receive final approval by the end of the year and to enter into force in 2024. The legislation carries particularly high penalties (up to 7% of annual global turnover, more than the 4% maximum under the EU General Data Protection Regulation, GDPR) and will require organizations that produce and use AI systems to take measures precisely to safeguard their AI portfolio.

In general terms, the Regulations stipulate that the use of Artificial Intelligence entails compliance with the following requirements, inspired by ethical principles:

- Human agency and oversight

- Technical robustness and safety

- Privacy and data governance

- Transparency

- Diversity, non-discrimination and fairness

- Social and environmental well-being

- Accountability (attribution of responsibilities).

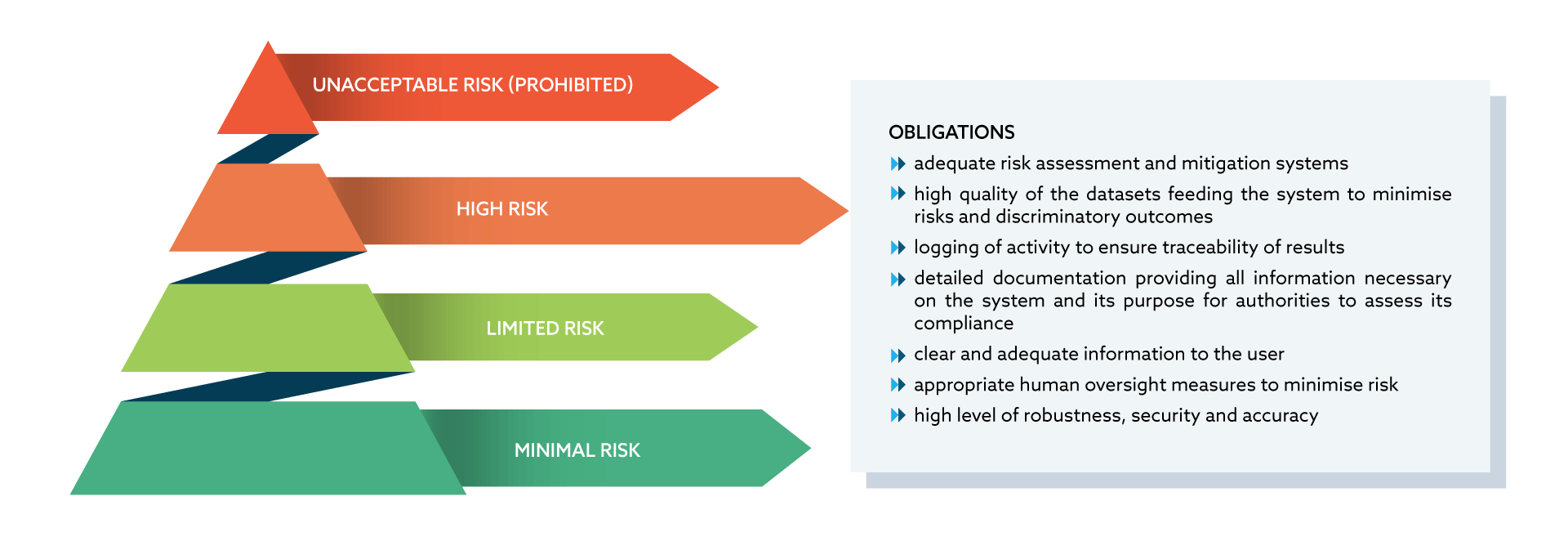

The AI governance model indicated by the Regulations is based on a risk-based approach that requires each AI system to be classified into one of the four levels shown in Figure 1.

Fig. 1 – AI Act: Risk based approach

Systems posing an unacceptable risk are those that:

- have a high potential to manipulate people through subliminal techniques without their knowledge, or to exploit the vulnerabilities of specific vulnerable groups, such as minors or people with disabilities, in order to materially distort their behavior in a way that causes them or another person psychological or physical harm

- are used by public authorities for general-purpose, AI-based social scoring

- apply “real-time” remote biometric identification in publicly accessible spaces for law enforcement purposes, subject to certain limited exceptions.

The deployment and use of these systems are prohibited.

High-risk systems are those intended to be used:

- for “real-time” and retrospective remote biometric identification of individuals

- to evaluate students in education and training institutions and to assess participants in the tests usually required for admission to educational institutions

- as safety components in road traffic management and in the supply of water, gas, heating and electricity

- for the recruitment or selection of individuals, to make decisions on the promotion and termination of work-related contractual relationships, to allocate tasks, and to monitor and evaluate performance and behavior

- by or on behalf of public authorities, to assess the eligibility of individuals for public assistance benefits and services and to grant, reduce, revoke or reclaim such benefits and services

- to evaluate the creditworthiness of individuals or establish their credit score (with some exceptions)

- to dispatch emergency first-aid services or to establish priority in their dispatching

- by law enforcement authorities, as polygraphs or to detect a person’s emotional state, to detect deep fakes, to prevent the recurrence of crimes or to detect and prosecute crimes through profiling, to assess the criminal traits or characteristics of individuals or groups, and to analyze hidden correlations in data for criminal analysis

- by the competent authorities in migration, asylum and border control management, for example to verify the authenticity of documents

- to assist a judicial authority in researching and interpreting facts and the law and in applying the law to a concrete set of facts.

For these systems, the Regulations establish a number of obligations:

- The performance of a risk analysis and the implementation of measures to mitigate the risks identified

- The assurance of high data quality, with the aim of minimizing risks of discrimination

- The logging of activities that involved the use of the system to ensure traceability of results

- Detailed documentation of the system and its purpose of use

- An adequate level of human supervision

- Transparency to those subject to the application of the system

- Compliance with robustness, accuracy and cybersecurity criteria.
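In practice, these obligations can be tracked for each high-risk system as a simple checklist. The sketch below is a hypothetical illustration: the identifiers (`RISK_MANAGEMENT`, `DATA_QUALITY`, and so on) are labels invented here for the seven points above, not terms defined by the AI Act.

```python
from enum import Enum

class Obligation(Enum):
    # Hypothetical labels for the high-risk obligations listed above
    RISK_MANAGEMENT = "risk analysis and mitigation measures"
    DATA_QUALITY = "high data quality to minimize discrimination risks"
    LOGGING = "activity logging for traceability of results"
    DOCUMENTATION = "detailed documentation of the system and its purpose"
    HUMAN_OVERSIGHT = "adequate human supervision"
    TRANSPARENCY = "transparency towards those subject to the system"
    ROBUSTNESS = "robustness, accuracy and cybersecurity"

def missing_obligations(fulfilled: set[Obligation]) -> list[Obligation]:
    """Return the obligations not yet fulfilled, in declaration order."""
    return [o for o in Obligation if o not in fulfilled]

# Example: a system for which only three obligations are covered so far
done = {Obligation.RISK_MANAGEMENT, Obligation.LOGGING, Obligation.DOCUMENTATION}
for gap in missing_obligations(done):
    print(f"Open: {gap.value}")
```

Using an `Enum` keeps the list closed and ordered, so the same checklist can be applied uniformly to every high-risk system in the catalogue.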

Then there are systems that are not considered high risk, but for which transparency requirements still apply; these are essentially the systems that

- interact with human beings

- are used to detect emotions or establish an association with (social) categories based on biometric data

- generate or manipulate content (“deep fakes”).

For systems that the risk analysis finds either not to use artificial intelligence techniques or, while using them, not to present significant risk profiles, no specific measures are required. The Regulations nonetheless recommend certain precautions.
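The four-level classification can be sketched as a function that assigns each catalogued system to the most severe level that applies. The boolean attributes below are a hypothetical simplification of the criteria described above (a real assessment requires legal analysis of each use case); the order of the checks, prohibited practices first and minimal risk last, follows the risk pyramid of the AI Act.

```python
from dataclasses import dataclass
from enum import Enum

class RiskLevel(Enum):
    UNACCEPTABLE = 4
    HIGH = 3
    LIMITED = 2   # transparency obligations only
    MINIMAL = 1

@dataclass
class AISystemProfile:
    # Hypothetical yes/no answers collected while cataloguing a system
    uses_prohibited_practice: bool = False   # e.g. subliminal manipulation, social scoring
    high_risk_use_case: bool = False         # e.g. recruitment, creditworthiness
    interacts_with_humans: bool = False
    detects_emotions_or_biometric_categories: bool = False
    generates_or_manipulates_content: bool = False

def classify(p: AISystemProfile) -> RiskLevel:
    """Return the most severe risk level that applies to the system."""
    if p.uses_prohibited_practice:
        return RiskLevel.UNACCEPTABLE
    if p.high_risk_use_case:
        return RiskLevel.HIGH
    if (p.interacts_with_humans
            or p.detects_emotions_or_biometric_categories
            or p.generates_or_manipulates_content):
        return RiskLevel.LIMITED
    return RiskLevel.MINIMAL

chatbot = AISystemProfile(interacts_with_humans=True)
print(classify(chatbot).name)  # LIMITED
```

Returning only the most severe level is a simplification: a high-risk system that also interacts with people would, in reality, be subject to the transparency requirements as well.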

The above does not cover all the provisions of the Regulations. For a comprehensive understanding of the AI Act, please refer to the European Commission page.

How to supervise AI

The regulatory requirements mentioned above, together with the practical need, regulatory obligations aside, to manage a resource that is increasingly critical to an organization’s success and survival, call for establishing or strengthening AI oversight based on a few fundamental principles.

Cataloguing of available AI assets

Each AI system must be inventoried, categorized and described in terms of the characteristics needed to classify it by risk level.
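A catalogue entry can be modelled as a structured record, with a check on which required information is still missing. The fields below are hypothetical, chosen only to illustrate the kind of information (purpose, owner, life-cycle stage, risk level, datasets used) that makes classification possible; an actual organization would derive them from its own metadata model.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class AISystemRecord:
    # Hypothetical catalogue fields; adapt them to your own metadata model
    system_id: str
    name: str
    purpose: Optional[str] = None
    owner: Optional[str] = None
    lifecycle_stage: Optional[str] = None   # e.g. "design", "operation", "decommissioned"
    risk_level: Optional[str] = None        # e.g. "high", "limited", "minimal"
    training_datasets: list[str] = field(default_factory=list)

# Fields that must be filled in before a system counts as fully catalogued
REQUIRED_FIELDS = ("purpose", "owner", "lifecycle_stage", "risk_level")

def missing_fields(record: AISystemRecord) -> list[str]:
    """List the required fields that are still empty for this record."""
    return [name for name in REQUIRED_FIELDS if not getattr(record, name)]

rec = AISystemRecord("AI-001", "Credit scoring model", purpose="creditworthiness assessment")
print(missing_fields(rec))  # ['owner', 'lifecycle_stage', 'risk_level']
```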

Governance

- Processes: specific processes must be defined to manage the life cycle of AI systems, from requirements definition through implementation and operation to decommissioning

- Roles and responsibilities: it is necessary to establish which roles are involved in the implementation, management and use of AI systems; some of them relate to individual systems (e.g., the Owner or the User), while others perform coordination, direction and monitoring functions across the entire AI governance system; for each role, responsibilities must be defined at every step of the processes described in the previous point; the roles must then be formally and unambiguously assigned to individuals and/or organizational units

- Metadata: we use this term in a broad sense, to mean the information that structurally identifies and describes Systems, Roles, Processes and all other entities involved in the AI management system

- Performance indicators: indicators capable of representing the status and evolution of the AI management system must be defined and periodically measured or calculated; examples include the percentage of AI systems that have not yet been fully catalogued and the percentage of high-risk systems
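The two example indicators can be computed directly from the catalogue. The sketch below assumes, hypothetically, that each catalogued system is a dictionary with a `fully_catalogued` flag and a `risk_level` string; the field names and sample values are illustrative only.

```python
def governance_kpis(systems: list[dict]) -> dict[str, float]:
    """Compute example AI-governance indicators as percentages of all systems."""
    total = len(systems)
    if total == 0:
        return {"pct_not_fully_catalogued": 0.0, "pct_high_risk": 0.0}
    not_catalogued = sum(1 for s in systems if not s.get("fully_catalogued", False))
    high_risk = sum(1 for s in systems if s.get("risk_level") == "high")
    return {
        "pct_not_fully_catalogued": 100 * not_catalogued / total,
        "pct_high_risk": 100 * high_risk / total,
    }

# Hypothetical catalogue snapshot
catalogue = [
    {"name": "Chatbot", "fully_catalogued": True, "risk_level": "limited"},
    {"name": "CV screening", "fully_catalogued": False, "risk_level": "high"},
    {"name": "Fraud detection", "fully_catalogued": True, "risk_level": "minimal"},
    {"name": "Credit scoring", "fully_catalogued": True, "risk_level": "high"},
]
print(governance_kpis(catalogue))
# {'pct_not_fully_catalogued': 25.0, 'pct_high_risk': 50.0}
```

Running the same function on periodic snapshots of the catalogue yields a time series, which is what allows the indicators to show the evolution of the management system and not just its current state.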

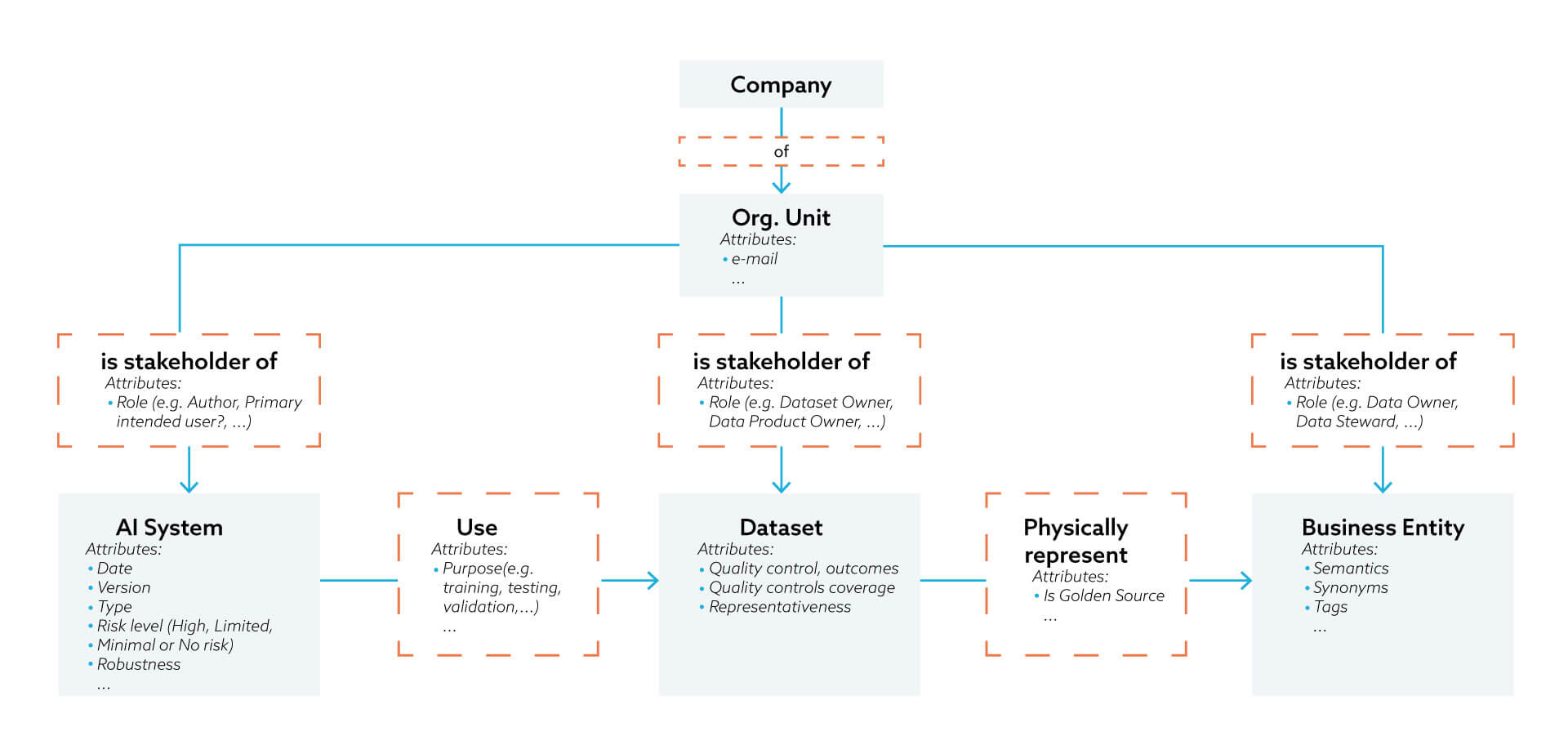

Integration with governance and data management

Many of the AI requirements, whether regulatory or dictated by internal organizational criteria, have as an enabler the data used, for example, for training, testing, and validation of AI systems; for this reason, it is important to document which datasets have been used for this purpose, which stakeholders within the organization are in charge of managing these datasets, and what quality controls are applied to them; often the roles envisaged by the AI management system are assigned to the same individuals or organizational units as those envisaged for the data governance system; the “metadata” model shown in Figure 2 represents an absolutely non-exhaustive example of what the entities to be overseen in an integrated “Data & AI Governance” system might be.

Fig. 2 – Data & AI Governance, Metadata Model
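To illustrate how an integrated model lets the two governance systems be queried together, the sketch below links AI systems to the datasets used to train, test or validate them and flags high-risk systems that rely on datasets without any quality control. The entity names, attributes and relationships are a hypothetical simplification, not the model shown in Figure 2.

```python
from dataclasses import dataclass, field

@dataclass
class Dataset:
    name: str
    steward: str                          # data governance role in charge of the dataset
    quality_controls: list[str] = field(default_factory=list)

@dataclass
class AISystem:
    name: str
    owner: str                            # AI governance role
    risk_level: str
    # Simplification: one dataset per usage ("training", "test", "validation")
    datasets: dict[str, Dataset] = field(default_factory=dict)

def unchecked_inputs(systems: list[AISystem]) -> list[tuple[str, str, str]]:
    """Return (system, usage, dataset) triples where a high-risk system
    depends on a dataset with no quality controls defined."""
    return [
        (s.name, usage, d.name)
        for s in systems if s.risk_level == "high"
        for usage, d in s.datasets.items()
        if not d.quality_controls
    ]

loans = Dataset("loan_history", steward="Credit Data Office", quality_controls=["completeness"])
bureau = Dataset("bureau_scores", steward="Risk Data Office")
scoring = AISystem("Credit scoring", owner="Retail Lending", risk_level="high",
                   datasets={"training": loans, "validation": bureau})
print(unchecked_inputs([scoring]))
# [('Credit scoring', 'validation', 'bureau_scores')]
```

Because datasets carry their own steward while systems carry their own owner, a single query can surface a gap and also point to both the data governance and the AI governance roles responsible for closing it.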

Flexibility, Dynamism

The data, processes, governance model, roles and responsibilities of the AI management system must be subject to review, extension and change over time, for both external reasons (although the basic features of the AI Act are settled, it may still be adjusted before it takes effect, and probably afterwards too) and internal ones (e.g., reorganizations). For this reason, the AI management system must ensure that all of its constituent elements, starting with the metadata model, can evolve over time.

Irion for AI Governance

Irion’s enterprise data management (EDM) platform provides an integrated set of capabilities covering all the principles outlined above.

The Open Data Governance module allows the metadata model to be extended to all the entities, relationships and attributes needed to represent the AI management system. With it, an AI Systems Catalog can be built, maintained and integrated with metadata from the Data Governance system, if available; processes can be configured; roles can be defined and assigned to the stakeholders involved; and indicators can be collected and calculated.

Alongside the system’s descriptive capabilities, supported by a powerful graphical engine based on Knowledge Semantic Graphs, Irion offers active functionalities such as the orchestration of configured processes, the implementation and execution of data quality and cataloguing rules for AI systems, the management of authorization profiles, and integration with other systems in the organization’s IT landscape through exposed APIs and a catalog of more than 200 connectors to different information sources and systems.


Mauro Tuvo has over 30 years of experience in supporting Italian and European organizations in Enterprise Data Management, focusing on Data Quality, Data Governance and Compliance (GDPR, IFRS17, Regulatory Reporting). He is the Principal Advisor at Irion, author and university lecturer with significant contributions in the field. He is also a member of technical committees and observatories related to data management.