Customers can focus on their objectives, relying on the platform’s functionality to prepare, optimize, and execute all the necessary steps. The central role of data and the declarative paradigm are at the core of all our technologies.

Assumptions and Reasons – Our everyday life is “declarative”!

In our daily activities, we behave and interact with other people according to a declarative principle. We communicate what we need and receive the result of what we request, commission or order. We do not specify how to carry out the given task; we do not teach others their work, so to speak. We simply declare what should be done and what objectives should be pursued and achieved. Finally, we evaluate the results and thereby measure the effectiveness of the actions taken.

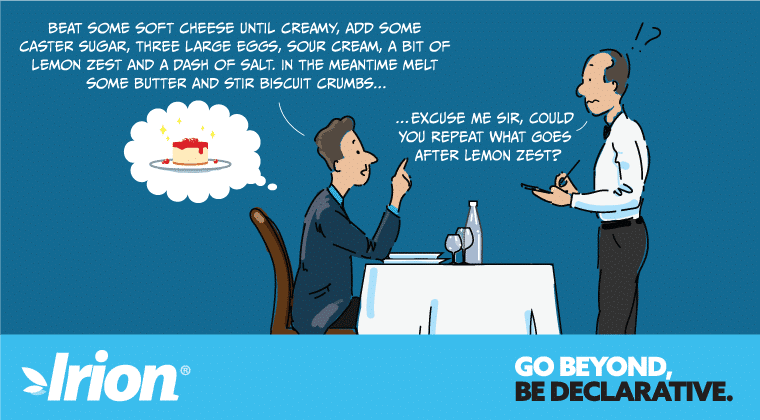

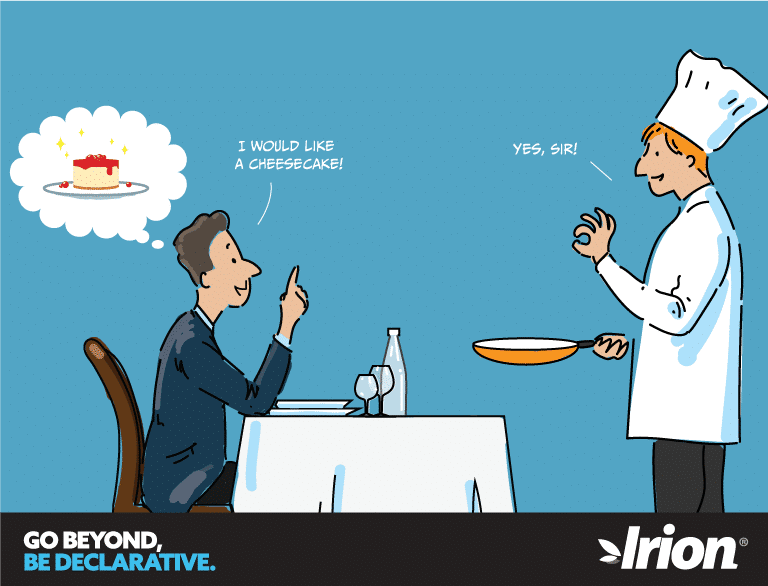

Who among us, ordering a dessert, would tell the waiter to tell the chef to beat some soft cheese until creamy, adding some caster sugar, three large eggs, sour cream, a bit of lemon zest and a dash of salt, and in the meantime to have the assistant cook melt some butter and stir biscuit crumbs into it to form a crust? It is much better to simply say: “I would like a cheesecake” and let the chef astonish us with a dessert that is very good today (on average much better than my recipe) and excellent tomorrow, when he further improves the recipe by testing and re-testing it like a real expert! We don’t need to define the chef’s task step by step, yet we are guaranteed it will be done perfectly. In the meantime, we may as well focus on choosing the drink to perfectly match the dish – and our tastes!

There are multiple reasons for this type of behavior in everyday life:

- It is an undeniable fact that we are not experts in everything. The result is often better if we delegate to others at least part of the project.

- Doing a job well requires concentration. Any distraction takes time and energy away from other tasks.

- The most effective way to solve complex problems is to divide them into simpler parts and delegate the necessary work.

- Only those who know a problem well can solve it adequately. Only those who continuously invest in research and accumulate experience can, over time, take the right steps for constant and necessary improvement.

In the end, we all act according to the effective principles of delegation, focus and isolation.

To do so, we don’t need to be prescriptive, nor go into every detail of the norms and rules to follow in carrying out a task. What we need is to be descriptive, limiting ourselves to correctly and exhaustively articulating the expected result.

This seemingly simple observation can also be applied effectively to advanced Data Management solutions. In much the same way, building them requires a declarative approach and a platform that supports it.

Data Management: traditional ETL-based approach

In computer science, we too often remain at the procedural level, prescriptive down to the last detail. We instruct applications even on basic functions instead of leveraging specially designed, optimized software.

The problem stems from the educational background of industry specialists. Approaches common in traditional training fail to break a problem down. Yet it is more efficient to isolate the aspects that can be delegated and reorganize the problem into replaceable components.

The majority of developers define detailed algorithms to solve problems.

Declarative models and languages aim to describe the expected result rather than the steps necessary to achieve it. However, few people know their meaning and implicit potential.

In IT, declarative languages are often underestimated, or are applied only in a limited, procedural way. Those familiar with the subject know that very few specialists, even if they know a declarative language such as SQL, have really grasped its potential in terms of data management and transformation.

A relational DB can run very complex processing while minimizing data transfer, using parallel algorithms that are strongly optimized at a low level and automatically adapted at run time to the statistical distribution of the data. However, developers rarely exploit its functionality to the full.
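
To make the contrast concrete, here is a minimal sketch in Python using the standard `sqlite3` module. The `orders` table and its sample rows are hypothetical and serve only as illustration. The first function prescribes every step of an aggregation; the second only declares the expected result and leaves the execution plan to the database engine.

```python
import sqlite3

# Hypothetical sample data: (customer, amount) rows.
ORDERS = [
    ("alice", 120.0),
    ("bob", 40.0),
    ("alice", 30.0),
    ("carol", 75.5),
    ("bob", 10.0),
]


def totals_procedural(rows):
    """Imperative style: every step of the aggregation is spelled out."""
    totals = {}
    for customer, amount in rows:
        totals[customer] = totals.get(customer, 0.0) + amount
    return sorted(totals.items())


def totals_declarative(rows):
    """Declarative style: state the result, let the engine decide how."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
        conn.executemany("INSERT INTO orders VALUES (?, ?)", rows)
        cursor = conn.execute(
            "SELECT customer, SUM(amount) "
            "FROM orders GROUP BY customer ORDER BY customer"
        )
        return cursor.fetchall()
    finally:
        conn.close()
```

Both functions return the same totals per customer. The difference is who owns the algorithm: in the declarative version the query text stays the same even if the engine later changes how it groups, sorts or parallelizes the work.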

At the application level, we can see that nearly all ETL data management systems are deceptively simplified by this school of thought. They implement an imperative and procedural paradigm in which the programmer must “instruct” the system in detail to blindly follow an algorithm fixed at “design time”.

Old and traditional ETL systems process data as a stream, one row at a time. Their logic leaves no room for future optimizations of the algorithm. Even new versions of the software will be strictly constrained to perform all the detailed steps already programmed.

And that is not all. ETL-oriented computing models that aim to process one record at a time immediately collide with ordinary functional needs. In reality, it is often necessary to make transformations involving multiple records, to calculate aggregates, analyze time series, reuse pre-processed partial results several times, etc.
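
The difference between the two kinds of transformation can be sketched in a few lines of Python. The function names and the sample series below are hypothetical. The first function needs only the current record, so it fits a row-at-a-time model; the second is a simple time-series computation whose every output depends on several records, which is exactly the state a per-record model has no natural place for.

```python
from collections import deque

# Hypothetical daily time series: (day, amount).
SERIES = [
    ("2024-01-01", 10.0),
    ("2024-01-02", 20.0),
    ("2024-01-03", 30.0),
    ("2024-01-04", 40.0),
]


def to_cents(record):
    """Record-at-a-time transform: needs nothing but the current row."""
    day, amount = record
    return day, round(amount * 100)


def moving_average(records, window=3):
    """Multi-record transform: each output depends on up to `window` rows."""
    recent = deque(maxlen=window)  # state shared across records
    averaged = []
    for day, amount in records:
        recent.append(amount)
        averaged.append((day, sum(recent) / len(recent)))
    return averaged
```

Here the state is a small in-memory window, but for aggregates over large datasets the same need is what pushes row-oriented tools toward staging tables and external SQL calls.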

Even the most renowned ETL systems tend to degenerate into an incoherent jumble of transformations and improvised SQL calls to place temporary data in some staging area and turn to the DBs to make up for their poor functional capabilities.

All ETL systems available on the market are based on a conceptual architecture that:

- does not follow a declarative model of functional delegation

- imposes a detailed description of the process and allows no optimization of processing, whether based on the current data distribution or in response to software improvements

- is not autonomous in terms of functional potential and often has to resort to external support systems

- moves masses of data over and over again from staging areas to processing servers and vice versa to perform the processing logic.

These scenarios, as common as they are inauspicious, result in:

- increasingly complex orchestration of transformation paths

- the need to define view tables and various support infrastructures using other tools, in uncoordinated ways

- overruns in cost and implementation time

- reduced computational performance

- increased costs of maintenance and change management

- the impossibility of parallel and coordinated developments and test cycles

- the almost total impossibility of documenting and tracing processes, regardless of the requirements of lineage and repeatability.

Data Management: Irion EDM – the integrated declarative platform for the most complex data management processes

Irion’s Enterprise Data Management Technology is the result of years of experience in mission-critical and data-intensive contexts. We developed this platform based on hundreds of projects: those with lots of data, with time constraints, with specifications that change in the course of work, with complex problems in contexts of highly articulated architecture and organization.

Our approach is “disruptive”: we do not propose yet another procedural ETL system with some extra function, some specific connector, a nicer editor and ‘magic’ engines for fast execution.

ETL processes are one variety of Data Management, which also covers many other areas (Rule-Based Systems, Data Quality, Data Integration and Reporting, Data Governance, etc.). We have redefined the concept of Data Management by adopting the declarative paradigm and making it prevail in technology and methodology.

One of our main technologies is actually called DELT™, which stands for Declarative Extraction Loading & Transformation. This acronym also represents the inversion of the loading and transformation phases (ELT versus ETL). With this technology, the entire process is carried out in accordance with a declarative model.

A DELT™ Execution is based on the following key concepts:

- Each dataset used in processing is represented virtually as a table or a set of tables whose contents cannot be changed once created. This ensures the system’s consistency, efficiency and controllability. The platform’s task is to implicitly perform all the transformations necessary to properly map a dataset, whatever its format.

- The entire processing is broken down into a set of “data engines”. These are functions that receive one or more virtual tables as input, perform the appropriate transformations and produce one or more virtual tables as output, according to the model shown.

- The functions can be of many types (Query, Script, Rule, R, Python, Masking, Profiling, etc.). They perform computations as configured by whoever sets up the solution, encapsulating various models and kinds of logic.

- The configuration of data engines implicitly defines a directed graph of execution dependencies. It enables the system to autonomously organize the sequence of steps necessary to produce each output, so the designer doesn’t have to describe the algorithm.

- Each object in the system can be run individually and/or in combination with others. This facilitates testing and maintenance, as well as refactoring and incremental development.

- DELT™ processing follows a declarative scheme that specifies the datasets expected in the output. It is the task of the system to automatically detect the “DELT™ Graph”, verify that it is acyclic, and initiate the optimized and parallel execution of all the steps necessary to produce the required result.

- In very complex cases the engine is able to modify the graph during execution according to the dynamic properties that determine it (these can also be influenced by previous steps), or even to generate and execute at run time the Data Engines necessary for specific computations.

- If necessary, a DELT™ Execution can engage other DELT™ Executions for the purposes of encapsulation and reusability of the business logic.

- IsolData™ is the proprietary technology used to make the data involved available to a DELT™ Execution in an isolated and managed space. It is a virtually unlimited workspace, dynamically allocated and freed by the system, segregated in terms of access permissions and namespace, volatile or persistent.

- IsolData™ allows n DELT™ Executions to run in parallel on the same or on completely different data and/or using different parameters, rules and logic, without the need to predefine or manage any particular structure. The framework coordinates and manages all the supporting infrastructure operations in an optimized and transparent way.

- During a DELT™ Execution, all data processing operations are performed at a low level on the server and in set-oriented mode, i.e., considering an arbitrarily complex set of datasets at the same time and not “one line at a time”.

- The server minimizes costly data movement operations by running potentially complex computations only once to produce intermediate data for multiple uses, without the need to explicitly save them in files or backup tables.

- Knowing a set of statistics on the data allows the engine to perform specific optimizations as the engines are running.

- Every DELT™ Execution allows a complex process to be developed interactively and incrementally, with all the intermediate results visible. It also enables full and intelligible tracing of the data and rules involved in each computation, for example for Audit purposes (see Automatic Documentation and OneClick Audit™®).
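
Several of the concepts above (engines as functions over read-only tables, an implicit dependency graph, an acyclicity check, and intermediate results computed once) can be illustrated with a toy sketch in Python. This is not Irion’s implementation or API: the `Pipeline` class, the `engine` decorator and all dataset names are hypothetical, and the sketch runs the steps serially rather than in parallel. It relies on the standard `graphlib` module (Python 3.9 or later).

```python
from graphlib import CycleError, TopologicalSorter


class Pipeline:
    """Toy registry of 'data engines'; every name here is hypothetical."""

    def __init__(self):
        self._engines = {}  # output name -> (input names, function)

    def engine(self, output, inputs=()):
        """Declare which dataset a function produces and what it consumes."""
        def register(func):
            self._engines[output] = (tuple(inputs), func)
            return func
        return register

    def _required(self, targets):
        """Collect only the engines needed for the requested outputs."""
        needed, stack = {}, list(targets)
        while stack:
            name = stack.pop()
            if name in needed:
                continue
            if name not in self._engines:
                raise KeyError(f"no engine produces {name!r}")
            needed[name] = self._engines[name][0]
            stack.extend(needed[name])
        return needed

    def run(self, *targets):
        graph = self._required(targets)
        # static_order() raises CycleError if the graph is not acyclic.
        order = list(TopologicalSorter(graph).static_order())
        results = {}
        for name in order:
            inputs, func = self._engines[name]
            # Each engine runs once; tuples keep the datasets read-only.
            results[name] = tuple(func(*(results[i] for i in inputs)))
        return {t: results[t] for t in targets}, order


pipeline = Pipeline()
calls = []  # records which engines actually ran


@pipeline.engine("orders")
def load_orders():
    calls.append("orders")
    return [("alice", 120.0), ("bob", 40.0), ("alice", 30.0)]


@pipeline.engine("big_orders", inputs=["orders"])
def big_orders(orders):
    calls.append("big_orders")
    return [row for row in orders if row[1] >= 40.0]


@pipeline.engine("customers", inputs=["orders"])
def customers(orders):
    calls.append("customers")
    return sorted({name for name, _ in orders})


@pipeline.engine("report", inputs=["big_orders", "customers"])
def report(big, names):
    calls.append("report")
    return [(name, sum(a for n, a in big if n == name)) for name in names]


@pipeline.engine("unused", inputs=["orders"])
def unused(orders):
    calls.append("unused")
    return orders


# Only the expected output is declared; the order of steps is derived.
outputs, order = pipeline.run("report")
```

The caller names only the `report` dataset. The sketch derives the sequence of steps from the declared inputs, skips the `unused` engine, and evaluates `orders` a single time even though two engines consume it. A real platform would add what this sketch leaves out, such as parallel scheduling, statistics-driven optimization and graphs that change during execution.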

A declarative approach to Enterprise Data Management is much more consistent with our common way of thinking. It responds to the principle of delegation of duties, guarantees unparalleled levels of performance, allows automatic optimization and continuous improvement, and enables Agile and incremental implementation of solutions. Finally, it is not a black box: it can give an account of its work.