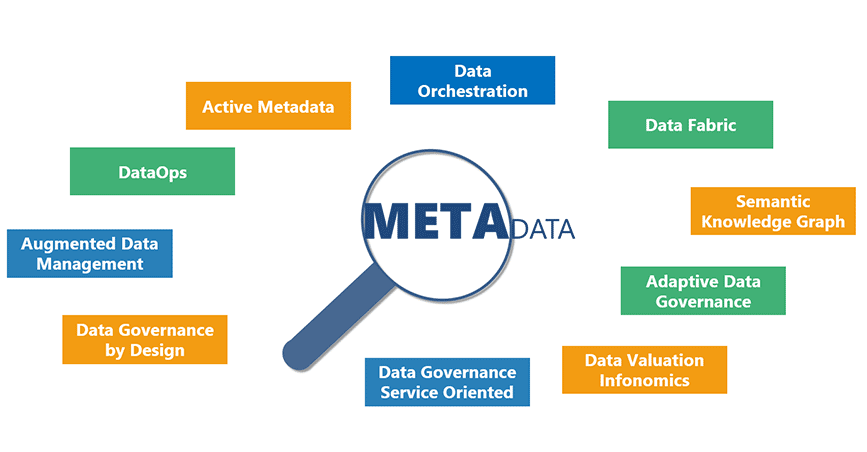

In October, Irion was invited to speak at the Annual Data Driven Banking in the next normal banking conference, in the TO BE Session of the Data Day, organized by ABI Servizi. We explained why metadata are at the center of hype and best practices in data management.

These are some of the areas that need and generate metadata:

- Data Management paradigms and design concepts,

- methodologies for developing and managing data-intensive solutions,

- operational styles of Data Governance currently in use, or indicated by analysts as reference models.

Let us have a brief overview of them..

Service-oriented Data Governance

This is a paradigm of Data Governance management. It aims at highlighting for the company its possible contribution to the assets and objectives of as many as possible company actors. This concept is embodied in the catalog of Data Governance services. It includes information services, analysis, monitoring and control models. They can be used for:

- formulating and implementing a digital strategy. They provide useful information to assess the sustainability of actions as well as the availability and accessibility of the data assets necessary for reaching the strategic objectives;

- designing a control system to apply to a certain information area. It will monitor how the controls cover the purposes of use of data;

- identifying the golden copy, the information source that contains the official version of certain information for use in an analysis or in a report.

All these services and others that may become necessary over time are enabled by metadata and the applicable techniques. These include Data Lineage, coverage of control systems, mapping of business concepts in computer systems and so on.

Service-oriented Data Governance uses metadata.

Data Governance by design

This practice should be applied in all project or management activities involving the company’s data. It means considering all the obligations that guarantee data management: ownership, lineage, semantics, quality, safety, and so on. For example, the project team of a new computer system takes into account the standard concepts in the company’s business glossary. They refer to these concepts in designing new structures. And new system-specific concepts are qualified in terms of ownership, definition/meaning, validation rules.

Data Governance by design uses and produces metadata.

Adaptive Data Governance

The Adaptive Data Governance model can be expressed through different styles. Their choice depends on the purposes, characteristics, the company’s maturity level, and the specific context of application.

A practical example can make this concept clearer. The Data Governance practices are necessary to ensure compliance with regulatory requirements in the banking sector. They initially focused on data quality. Due to the evolution of these requirements, the banks now have to also cover other governance aspects, such as Data Lineage. But this is only the beginning. Regulatory compliance is no longer the only reason to oversee data assets. Now the purpose is also to qualify and oversee them to make the best use of their full potential value. Besides, in the governance perimeter we no longer manage only the data used for reporting and accounting to supervisory bodies. The volumes, latency, nature and types of the governed data change. For these reasons, the Data Governance style must adapt to these new purposes and types of information. Consequently, the metamodel that supports Data Governance is extended with new entities, attributes, relationships. A simple example was the introduction of the metadata needed to manage the compliance with the European Regulation for the protection of personal data. It introduced the Category of personal data. However, the need to be Adaptive challenges the company to evolve further. The IoT, big data, cloud and hybrid environments, metadata driven Data Management systems brought about new types of metadata: active metadata, latencies, channels, architectures.

In Adaptive Data Governance, different styles of Data Governance require the availability of different metadata.

Data Fabric

Data assets are growing exponentially and in multiple dimensions. Organizations will face, or already face the challenges of managing them effectively and sustainably. In view of these challenges, Gartner defines the design concept of Data Fabric.

- The available data are growing in volume. The sources they come from are more and more numerous and diverse. They also have heterogeneous natures and life cycle speeds;

- Cresce la domanda di informazioni da parte di categorie di data consumer umani e “tecnologici” sempre più numerose (business analyst, data scientist, data engineer, modelli “autonomi” di analytics, Ai, ML, …)

As a result, Gartner has rationalized in the Data Fabric concept a series of capabilities, organized into pillars. This is an ideal model, not yet fully implemented in practice. Still, it is establishing itself as a reference for companies and vendors in the sector. At least three of the closely interconnected pillars defined by Gartner relate directly to metadata:

- Augmented Data Catalog: a catalog of available information with distinctive features. These aim at an active use of metadata to maximize the efficiency of Data Management processes;

- Semantic Knowledge Graph: a graphical representation of the semantics and ontologies of all entities involved in data asset management. Naturally, the basic components represented in this model are metadata;

- Active metadata: analyzing these metadata helps to find opportunities for easier and more optimized processing and use of data assets. These include log files, transactions, user logins, query optimization plans.

Metadata are the foundation of the Data Fabric concept. According to Gartner, it is the reference for the future of Data Management.

Data Valuation

Data Valuation receives more and more interest. This discipline studies and estimates the value of a company’s data assets. The aims of Data Valuation are:

- provide support to assessing priorities and budgets in terms of data and intensive processes;

- establish the data’s commercial value for partners / competitors (data monetization);

- revidence the contribution of data governance to the enterprise value.

You can calculate the Data Valuation metrics based on the various contributions to the data cost and value. Those are measured along a data production chain that can be represented structurally through a metadata model. Once calculated, these metrics can enrich the model. They are collected as attributes of the cataloged data.

Data Valuation uses and produces metadata.

How to get ready, and why

The described concepts, practices and methods can sustain, now or in the future, a series of technological, organizational and cultural challenges. To our knowledge, today there are no companies able to apply them practically, nor are there technologies capable of supporting them completely. It is certain, however, that it is not possible to manage them without using and producing the respective metadata.

The companies interested in valueing or adopting one or more of these models can prepare now, acting on three levels:

Methods

Begin to structure and model the characteristics of application solutions and data-intensive processes..

- Start to represent requirements, functional and technical features through a set of structured metadata interconnected in a semantic model. Do so both while implementing and maintaining data-intensive solutions..

Tools

Check if your architecture has these features and eventually integrate them:

- metadata driven data management components / platforms;

- metadata modeling tools that can define and manage highly configurable semantic models. Make sure you can adapt them in time in terms of entities, attributes, and relationships.

Competencies and culture

- Check what specific skills are available in metadata management, conceptual and logical data modeling, agile project management methodologies. Eventually, strengthen and develop them;

- promote educational initiatives on the principles of using metadata, reading data models, understanding and expressing requirements in a structured form. This can occur in the broader context of data literacy programmes.

Want to learn more?

Contact us! We will provide you with illustrative examples of how other organizations have already started their transformation.